Using Argus

The best way to get started using argus, is to get the argus and client software (button below), compile it on one of your Mac OS X, Linux, Unix or Cygwin enabled Windows systems, and play around with analyzing a few packet streams, to see how it basically works. Usually, just those first steps get you thinking as to how you can use argus to solve one of your problems. If you feel like just starting on one of the basic projects that sites are doing, this page should point you in the right direction.

Argus is supplied as source code, so to really get started you need to compile and install the software onto a ported system. Argus has been ported to all flavors of Linux, and most Unixes that are available, including Solaris. How to compile and install is described in the distribution file INSTALL that is in each package.

Analyzing Packet Files

Argus processes packet data and generates summary network flow data. If you have packets, and want to know something about whats going on, argus() is a great way of looking at aspects of the data that you can't readily get from packet analyzers. How many hosts are talking, who is talking to whom, how often, is one address sending all the traffic, are they doing the bad thing? Argus is designed to generate network flow status information that can answer these and a lot more questions that you might have.

If your running argus for the first few times, get a packet file from one of the IP packet repositories, such as pcapr and process them with argus().

Once you have both the server and client programs and a packet file, run:

argus -r packet.pcap -w packet.argus

ra -r packet.argus

Compare the output of argus() with tcpdump() or wireshark. You should see something completely different. Instead of individual packets, you'll see flow status records. To see an example of the two side by side, here is an example.

Analyzing Network Streams

Many sites use argus to generate audits from their live networks. argus can run in an end-system, auditing all the network traffic that the host generates and receives, and it can run as a stand-alone probe, running in promiscuous mode, auditing a packet stream that is being captured and transmitted to one of the systems network interfaces. This is how most universities and enterprises use argus, monitoring a port mirrored stream of packets to audit all the traffic between the enterprise and the Internet. The data is collected to another machine using radium() and then the data is stored in what we describe as an argus archive, or a MySQL database.

From there, the data is available for forensic analysis, or anything else you many want to do with the data, such as performance analysis, or operational network management.

Once you have both the server and client programs installed, this usually works:

argus -P 561 -d

argus will open the first interface it finds (just like tcpdump), process packets and make its data available via port 561, running as a background daemon.

You can access the data using ratop(), the tool of choice for browsing argus data, like so:

ratop -S localhost:561

Processing Examples

There are a lot of things you can do with argus data. This list of examples is not comprehensive, by any measure, but should get you past the basics and into some interesting projects. If there is anything that you have done, that you would like included in the list, or if there is something that you would like described, and not sure how to go about it, please send email to the developer's list, and we'll add it !!

The first set of examples are demonstrations for using argus and the argus-client programs. This is a work in progress, so if the example you're looking for is missing, send email to the developer's list.

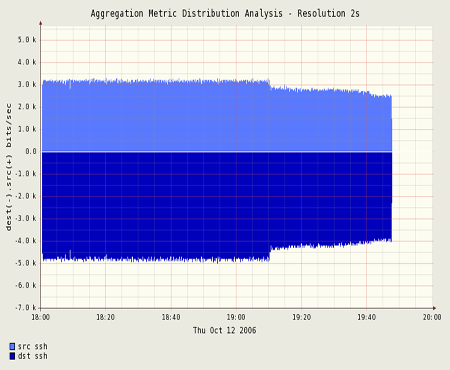

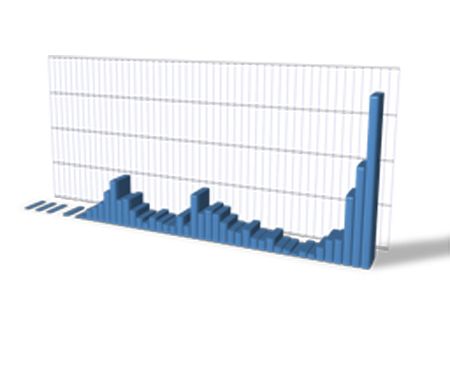

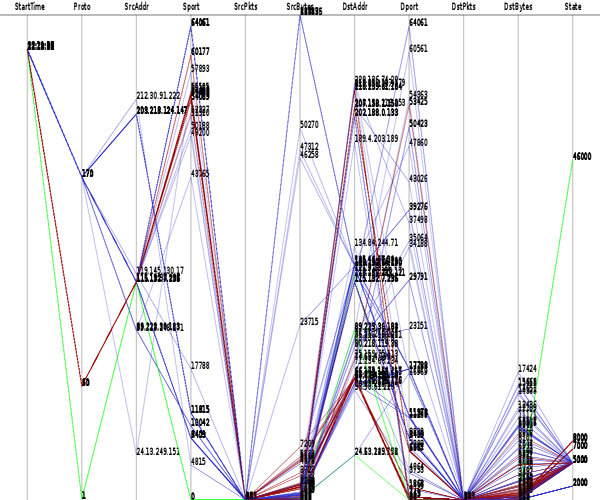

The second set are examples of how to graph argus data using either ragraph(), gnuplot() or other general purpose graphing programs. Some examples will assume some experience with argus data. Hopefully you will find them useful..

Geolocation

There are a lot of definitions for geolocation, but for argus data, geolocation is the use of argus object values for geo-relevant positioning. For pure argus data, the Layer 2 and Layer 3 network addresses that are contained in the flow data records provide the basis for geographically placing the data. For argus data derived from Netflow data, AS numbers can be used to provide a form of netlocation. Additional data that is used to provide relative geolocation are TTL (hops), Round Trip Times, and One-Way Delay metrics. Layer 2 and 3 network address information doesn't provide any sense of where they are, but because Layer 2 and 3 addresses are suppose to be globally unique, at any given moment, there should be a single physical location for each of these objects.

To provide geolocation, such as country codes, or latitude/longitude (lat/lon) information, argus clients use third party databases to provide the mapping between Layer 3 addresses and some geo-relevant information. Argus clients support the use of two free Internet information systems; the InterNic databases, which provide Country Codes, and MaxMind's Opensource GeoIP database, which can provide geolocation for the registered administrator of the domain.

Country codes are fairly reliable, and some IP address location from GeoIP are well mapped, so these free systems are very useful.

Getting the Database

InterNIC Databases

All argus clients can use the databases from the Internic for providing country codes. This support is triggered by simply printing either of the country code designations in the argus record. The database itself is specified in your .rarc fil. This is usually stored in /usr/local/argus/delegated-ipv4-latest. The name is pretty weird, but it follows the convention of how the Internic names its files.

But where did this database come from? A starter file is provided in the argus-clients distribution tarfile, in the ./support/Config directory, but its probably stale when you get it. In the ./support/Config directory, there is a shell script, called ragetcountrycodes.sh. This script uses wget() to retrieve databases from the various internation domain name registries, and merges them together to form our database.

After placing the database file at the PATH specified in your .rarc, printing country codes is available.

MaxMind GeoIP

Argus clients can be configured and compiled to use MaxMind's OpenSource C API libraries, which provide support for using their free and "pay as you go" databases. To enable this support, you need to obtain the GeoIP C API and install it on your system, using the instructions provided. After installation, you configure all the argus clients to use these databases using:

% ./configure --with-GeoIP=yes

% make clean; make

Currently, two (2) programs, ralabel() and radium() provide support for argus record labeling. The Maxmind libraries are configured for the location of the databases, so argus client programs configured with these libraries don't have to have any additional configuraiton support for the information store, they just need to know what kind of geolocation information you're interested in working with.

Ralabel() - Inserting Geolocation Data into Argus Records

Geolocation data maybe relevant for only a short time, and so getting the data into the argus data records is an important support feature. Geolocation data, such as country codes, lat/lon values and AS numbers, have structured storage support, so that you can filter on it, aggregate using the data as aggregation keys, and you can anonymize the information. Use ralabel() and its extensive configuration support to specify what geolocation data will be inserted into each argus record that it encounters. The amount of data added is not huge, but it will have an impact. Most uses of this information involve some form of pipeline processing, where the geolocation data is added at some point in a data pipeline, so that a downstream process can process the records that contain the location data, at which time the data is stripped, prior to storing on disk. This form of "semantic pumping" is a common practice with near real-time flow data processing.

Geolocation data for country codes and AS numbers are structred. We have specific DSR's for this information, the data is stored as packed binary data, and because its in specific DSR's you can filter and aggregate on these values. Other geolocation data such as lat/lon, postal address, state/region, zip and area codes are unstructured. The unstructured data is stored as argus label metadata; ascii text strings with a very simple syntax. These can be printed, merged, grep'ed, and stripped.

To insert geolocation data into argus data, whether its in a file or a stream, you use either ralabel() or radium(). For specific information regarding radium() and record classification/ labeling, please see the radium() documentation. ralabel() has its own ralabel.conf configuration file that turns on the various labeling features, and all the geolocation support is configured using this file. To get a feel for all the features, grab the sample ralabel.conf that came with the most recent argus-clients distribution, and give it a test drive.

In the general case, you will process either "primitive" argus data or you will be processing the data in either an informal or formal pipeline process. Below is a standard strategy for taking an argus file, and labeling both the source and destination IPv4 addresses with geolocation data.

% ralabel -r /tmp/ipaddrs.out -f /tmp/ralabel.conf -w /tmp/ralabel.out

Working with Geolocation Labels

Country Codes

Country code databases are maintained by the Internet Corporation for Assigned Names and Numbers (ICANN). The InterNIC, which maintains the global Domain Name Systems registration functions, is supported by a collection of Regional Internet Registries (RIR) that manage the allocation of IP addresses for their region. These organizations maintain databases of responsible parties for the IP addresses that have been allocated. This data store provides the information for IP address/country code databases.

RIR based country code information is a reasonably good source of geolocation information, at least for locating the person/company that claims to have responsibility for a particular address. The actual physical location of a specific address, however, is outside the scope of what the RIRs are doing. But the information is very useful for many geolocation applications. If you want better information, commercial databases can be more accurate. Many commercial databases, however, simply repackage the RIR databases, so take this information with a "grain of salt", so to speak.

As an example, to generate aggregated statistics for country codes, you need to first insert country codes into the records themselves, using ralabel(), and then you need to aggregate the resulting argus data stream, using racluster(). This will require a ralabel.conf configuration file to turn on RALABEL_ARIN_COUNTRY_CODES labeling. Use the sample ./support/Config/ralabel.conf file as a starting point, and uncomment the two lines that reference "ARIN". Assuming you created the file as /tmp/ralabel.conf, run:

% racluster -M rmon -m srcid smac saddr -r daily.argus.data -w /tmp/ipaddrs.out - ipv4

% ralabel -r /tmp/ipaddrs.out -f /tmp/ralabel.conf -w /tmp/ralabel.out

% racluster -m sco -r /tmp/ralabel.out -w - | rasort -m pkts -w /tmp/country.stats.out

The first command will generate the list of singular IPv4 addresses from your daily.argus.data file. The "-M rmon" option is important here, as that tells racluster() to generate stats for singular objects. The second command labels the argus records with the country code appropriate for the IP addresses. Then we cluster again, based on the "sco" label, and sort the output based on packet count.

The resulting /tmp/country.stats.out file will have argus records representing aggreagated statistics for each distinct country code found in the IPv4 addresses in your data. We limit the effort to IPv4 because the labeling is currently only working for IPv4 addresses. This should be corrected soon.

The resulting output argus record file can be printed, sorted, filtered whatever. So lets generate a report that shows the percent traffic per country. Using ra() we specify the columns we want in the report, and we use the "-%" option to print the columns as percent total. We only need three(3) decimal precision here, so:

% ra -r /tmp/ra.out -s stime dur sco:10 pkts:10 bytes -p3

StartTime Dur sCo TotPkts TotBytes

2009/09/15.00:00:00.901 86402.672 US 9092847 8180857733

2009/09/15.15:26:35.781 6932.988 UA 37323 34939933

2009/09/15.00:01:52.826 85437.820 EU 34853 27606569

2009/09/15.12:08:29.805 41747.223 NO 5338 3510110

2009/09/15.00:01:52.388 85318.320 DE 4374 1960894

2009/09/15.00:01:52.733 85038.320 GB 2063 961983

2009/09/15.00:51:19.951 82470.445 JP 1635 646413

2009/09/15.00:19:22.518 84389.109 SE 1336 500372

2009/09/15.00:00:40.821 85499.008 CA 1233 235801

2009/09/15.00:06:17.104 86020.656 FR 1223 154310

2009/09/15.01:18:37.078 80444.398 KR 1067 74638

2009/09/15.16:35:30.421 7.551 PL 900 336890

2009/09/15.10:44:19.486 41702.855 SI 834 470894

2009/09/15.00:19:22.174 84388.914 NL 596 81189

2009/09/15.09:52:10.142 50289.852 IT 545 360437

2009/09/15.00:19:21.677 85236.164 CH 412 46950

2009/09/15.09:51:57.418 43514.777 AU 396 197180

2009/09/15.09:05:00.989 51842.965 AP 216 35912

2009/09/15.00:06:39.473 81546.859 CN 138 17668

2009/09/15.21:49:08.497 1.763 ZA 80 12868

2009/09/15.01:05:13.283 80195.633 TW 64 9190

2009/09/15.01:22:16.781 57680.957 IE 64 8344

2009/09/15.09:41:22.225 42981.516 IN 48 7456

2009/09/15.00:01:53.272 63859.348 RU 44 5401

2009/09/15.00:01:54.930 0.237 NZ 16 1800

2009/09/15.12:52:03.644 0.196 DK 16 1916

2009/09/15.23:37:05.528 0.577 LU 16 2244

2009/09/15.09:52:27.636 0.141 CS 8 864

2009/09/15.11:39:26.752 0.166 FI 8 1202

2009/09/15.09:43:33.302 0.166 BR 4 458

2009/09/15.09:43:15.856 0.105 BE 4 412

2009/09/15.07:59:15.435 12581.292 MX 4 260

2009/09/15.09:43:39.674 2.363 VE 2 150

2009/09/15.15:08:30.196 2.404 HU 2 150

2009/09/15.11:29:36.073 0.000 CO 1 63

2009/09/15.02:59:00.745 0.000 RO 1 79

2009/09/15.08:33:38.455 0.000 AR 1 418

2009/09/15.08:22:13.605 0.000 ES 1 62

2009/09/15.20:27:30.993 0.000 IL 1 92

2009/09/15.08:30:49.794 0.000 HK 1 62

Autonomous System Numbers (ASN)

AS Numbers are not strictly geographic location information, but rather network location information. Each IP address resides in a single Source Autonomous System, and like country codes, there is geolocation information for the management entity for each AS in many public and commercial databases. Cisco's Netflow records can provide AS numbers for IP addresses, and they can be either the Origin ASN's, or the Peer AS, which is an Autonomous System that claims to be a good route for traffic headed to a particular IP address. Peer ASNs are your next-hop AS for routing. While this information is important for traffic engineering and routing, it is not useful for geolocation of the IP address itself.

We use the MaxMind GeoIP Lite database to provide Origin AS Number values for IP addresses. These numbers can be filtered and aggregated, so that you can generate views of argus data specific to Origin ASNs. The methods above can be used to generate data views for "sas" the source AS number.

Latitude/Longitude (Lat/Lon)

There are a large number of both commercial and public sources of IP Address GeoLocation Information that can provide lat/lon data. We provide programatic support using MaxMind's GeoIP Open Source API's (see above) which provides lat/lon for IP addresses. MaxMind's commercial database has reported excellent quality for this information.

Archives

There are basically two archive strategies that we support with the current argus-client programs. The first is native file system based, where the data is stored as files in the hosts file system. The second is where all the data is stored as a set of MySQL tables. There are advantages and limitations to both mechanisms, and you can use both at the same time to get the best of both worlds.

This page describes issues we're working on for archive mangement and performance. Most of it relates to native file system support.

Time Indexing

One of the important properties of an argus archive is to be able to 'fetch' argus data from the repository quickly. Usually that means getting some or all the data that you have for a given time period, in order to inspect the data for specific events, conditions, etc... Rarely do you want the entire archive, usually you want to know what was happening relative to an event, which has a time associated with it. Last monday around noon, yesterday at 11:14:20.15 am someone logged into a machine from ....., can you tell me .... I tried to access this machine an hour ago, and had some trouble, can you help me?

All of these archive queries have a time scope associated with them. This technology is provided to help you access argus data from your archive with specific time ranges. Our strategy to accomplish this is to generate a time index for argus data files using the utility rasqltimeindex(), and to read the data using the utility rasql().

Native File System Repository

The program for establishing a native file system respository time index is rasqltimeindex().

rasqltimeindex -r argus.file -w mysql://user@host/db

rasqltimeindex() will create a MySQL database table entry for every second that is 'covered' by an argus record in the file, and insert them into the 'Seconds' table in database 'db', creating the table if needed. Each row will have the seconds value, the probe identifier and the byte offsets for the beginning and end of all the records that SPAN the specific second in the file.

If you want to index an entire archive at one time, you can do this:

rasqltimeindex -R /path/to/the/archive -w mysql://user@host/db

This will cause rasqltimeindex() to recursively find all the argus data files in the file system under /path/to/the/archive, and it will index and insert any files that have not already been indexed.

Now, you can also filter the input to rasqltimeindex(), like any ra* program, and generate indexes of specific data, but because there is only one 'Seconds' table per database, the usefulness of this strategy is limited.

Processing Compressed Data

Indexing compressed data is supported. rasqltimeindex() will uncompress the file before indexing, so all the byte offsets will be correct. The filename that is stored in the 'Filename' table will have the compression extension included, and rasql() will test for the existence of both the compressed or uncompressed file when it goes to read the file.

Because rasql() will read the file starting at the specifed byte offsets (thus the performance improvements), rasql() will need to uncompress the file, and leave it in place, to do its file processing. This will require that rasql() have write access to the archive.

Reading Archive Data

Data is read from argus archives that are supported by MySQL based tables, using the program rasql(). When given a time range expression, rasql() will use the tables described above, you just need to provide the -t time range option and the database where the indexes are stored:

rasql -t -10d+20s -r mysql://user@host/db

In this case we're looking for all the records in the database that span 00:00:00 - 00:00:20, 10 days ago. Used in this way, rasql() will search the Seconds table for all the seconds that occur between the start and end time range expression. From that data, it calculates byte offsets of all the files that are in the Filename table, and in table order, it will read all the data in each matching file, apply the same time filter to the records as they are read.

MySQL Database Repository

When the data is being held solely in a MySQL Database Repository, there is no need for a Seconds table index. But, many sites have a hybrid system, where data is held in a MySQL based repository for a time period, like 1 month, and then the data is rolled out of the database into a native file system repository for the remainder of the archive retention period. In this case, you will have a need to quickly access the data regardless of what repository strategy is being used.

rasql() supports time searching this hybrid strategy, and you don't have to worry about it. Lets say that you need to get some data from the archive that relates to a particular metwork, last week April 1st, around lunchtime.

rasql -t 2010/04/01.11:58:00+5m -M time 1d -r mysql://user@host/db/argus_%Y_%m_%d - net 1.2.3.0/24

This takes the time range, and the table naming strategy, and figures out which table(s) from the local argusData database to read. It also looks to see if there is a 'Seconds' table in that database, and if so, it will query the table to see if there are any files that also need to be search. You can write these records to a file, or pipe to other programs, like racluster() to generate a view that you want. See ra.1 for how to specify time filters.

Anonymization

Anonymity is a state of "namelessness", or where an object has no identifying properties. Anonymity in network data is a big topic when you consider sharing data for research or collaboration. There are laws in many countries against disclosing personal information, and many corporate, educational and governmental organizations are concerned about disclosing information about the architecture, organization and functions of their networks and information systems. But sharing data is critical for getting things done, so we intend to provide useful mechanisms for anonymity of flow data.

The strategy that we take with argus data anonymization is that we want to preserve the information needed to convey the value of the data, and either change or throw away everything else. Because data sharing isn't always a life-or-death level issue, not all uses of anonymization require 'perfect secrecy', or 'totally defendable' results. If you require this level of protection, use ranonymize() with care and thought. We believe that you can achieve practical levels of anonymity and still retain useful data, with these tools.

The IETF has developed a draft document on flow data anonymization, draft-ietf-ipfix-anon, which has some opinions and some descritions of some techniques for flow data anonymization. Argus clients should minimally support all of the techniques described in this document. If we are missing something that you would like to see in flow data anonymization, please send email to the list.

ranonymize()

The argus-client program that performs anonymization is ranonymize(). This program has a very complex configuration, as there are a lot of things that need to be considered when sharing data for any and all purposes. A sample configuration file can be found in the argus-clients distribution in ./support/Config/ranonymize.conf. This file describes each configuration variable and provides detail on what it is designed to do and how to use it. Grab this file and give it a read if you want to do something very clever.

By default ranonymize() will anonymize network addresses, protocol specific port numbers, timestamps, transaction reference numbers, TCP base sequence numbers, IP identifiers (ip_id), and any record sequence numbers. How it does that is described below. By default, you will get great anonymization. Great, but not "perfect", in that there are theoretical behavioral analytics that can "reverse engineer" the identifiers, if another has an understanding of even just a subset of the flow data. If you need a greater level of anonymization, you will need to either "strip" some of the data elements, such as jitter and the IP attributes data elements, and/or use the configuration file to specify additional anonymization strategies.

Once you have anonymized your data, use ra() to print out all the fields in your resulting argus data, using the ra.print.all.conf configuration file in the ./support/Config directory, to see what data is left over. If you see something you don't like, run ranonymize again over the data with a ranonymize.conf file to deal with the specific item.

Identifiers That Really Need Anonymization

User Payload Data

Argus has the unique property of supporting the capture of payload data in its flow record status reports. This feature is used for a lot of things, such as protocol identification, protocol conformance verification and validation, security policy enforcement verification, and upper protocol analysis. The feature is completely configurable (by default its turned off, of course) and you determine how much upper layer data you want to capture.

There is only one supported anonymization strategy for User Payload Data, and that is to remove it from the argus record. This argus data element has the potential to contain exactly what most sites are worried about sharing/leaking/exposing, so we aren't going to try to do anything with this data. If you want to preserve it (why on earth would you want to do that?) write your own program.

Time

It maybe surprising that time anonymization comes before other objects, but time is so important to the person wanting to defeat your anonymization strategy, that we should deal with it first. The absolute time and the relative times in argus records should be considered for anonymization, and ranonymize() has lots of support for modifying time, injecting variations in time etc.... By default, ranonymize() will add a constant uSecs offset, chosen at random, to all the timestamps in all the records in an argus data stream or file. This "Fixed Offset" style of anonymization preserves relative time, interpacket arrival, jitter and transaction duration, which in general, are the kinds of things that you need when analysising flow data.

Network Addresses

The next most important objects in argus data for anonymization are the network addresses. Argus has the unique property, currently, of supporting the capture of many encapsulation identifiers at the same time. Argus can have Ethernet addresses, Infiniband Addresses, tunnel identifiers (GRE, ESP spi), etc... With regard, to anonymization, each of these can provide some form of identification. The most important is the Layer 2 addresses that argus can optional contain. These addresses are unique to the endsystem, whether that is a router/switch, a cell phone using Wi-Fi, a laptop or workstation, the ethernet address is the most identifying information in the flow record.

ranonymize() has the ability to anonymize the entire address, or portions of the address, in order to preserve certain semanitcs. Ethernet addresses are interesting in that they contain a Vendor identifier and then a completely unique station identifier. There are situations where you may want to preserve the Vendor ID, say to convey to the recepient of the anonymized data that the flows are going through a Netgear Wireless Router, on one side, and a Juniper Router on the other. But you will still want to anonymize the Station ID.

For IP addresses, which are composed of a Network address and a Host address, ranonymize() supports anonymizing the two parts independantly. This is important because you many want to preserve the Network address hierarchy, i.e. two different IP addresses that are in the same Network, could be anonymized to have the same Network address part, but different Host address parts. We discuss the various strategies for anonymization below.

In any event, all network station identifiers should be considered for anonymization.

Sequence Numbers

The next most important objects in argus data for anonymization are the sequence numbers. Argus records contain a lot of sequence numbers that are copied from the packets themselves. Argus does this to support calculations of loss, but also to aid in identifying network traffic at multiple points in the network. This is, of course, the vary condition that we need to protect ourselves from, so all protocol sequence numbers, such as TCP, ESP, DNS transactional sequence numbers, and even the IP fragmentation identifier, need to be anonymized. This is done by default, and you do have some control over this in the configuration file.

Port Numbers

The next most important objects in argus data for anonymization are the Service Access Port (SAP) numbers. Most SAP's are not identifiable objects. They are well known port numbers or protocol numbers, which are so ubiquitous that having the information is not useful, or they have only local significance. But some port numbers, such as the UDP and TCP dynamically allocated Private Ports are somewhat unique, and should be considered for anonymization.

ranonymize() provides a lot of flexibiliity in anonymizing port numbers, because the port numbers have significance to the receiver of the anonymized data. They want to know what services are being referenced etc...

Argus, Netflow, Flow Tools, Sflow and Jflow

Introduction

Argus now supports reading native Cisco Netflow, JFlow, Flow tools, and we are working on sflow data. Netflow v3-8 are supported, and v9 is being developed. With the basic completion of the IETF's IPFIX WG original charter, there is absolutely no doubt that Netflow V9 will be the prevalent flow data generated by commercial vendors for quite some time.

All argus-client programs can currently read native Netflow data, versions 1-8, and we've started work on supporting Netflow v9. Argus data is a superset of Netflow's data schema, and so there is no data loss in the conversion. Argus data that is derived from Netflow data does have a specific designation, so that any argus data processing system can realize that the data originated from a Netflow data source.

Argus can read Flow Tools data and Junipers's Jflow data ,and an incomplete implementation of Inmon's Sflow flow data support is in argus-3.0.6. If you have an interest in using Sflow data or IPFIX data, send us email.

Reading and Processing Netflow Data

All argus client programs can read Cisco Netflow data, versions 1 - 8, and convert them to argus data streams. This enables you to filter, sort, enhance, print, graph, aggregate, label, geolocate, analyze, store, archive Netflow data along with your argus data. The data can be read from the network, which is the preferred method, or can be read from files that use the flow-tools format, such as those provided by the Internet2 Observatory.

ra -r netflow.file

When reading from the network, argus clients are normally expecting Argus records, so we have to tell the ra* program that the data source and format are Netflow, what port, and optionally, what interface to listen on. This is currently done using the "-C [host:]port" option.

ra -C 9996

If the machine ra* is running on has multiple interfaces, you may need to provide the IP address of the interface you want to listen on. This address should be the same as that used by the Netflow exporter.

ra -C 192.168.0.68:9996

While all ra* programs can read Netflow data, if you are going to be collecting Netflow persistently, the preferred method is to use radium() to collect and redistribute the data. Radium() can collect from up to 256 Netflow and Argus data sources simultaneously, and provides you with a single point of access to all your flow data. radium() supports distributing the output stream to as many as 256 client programs. Some can act as IDS/IPS applications, others can build near real-time displays and some can manage the flows as an archive, which can be a huge performance bottleneck.

All argus records contain a "source id", which allows us to discriminate flow data from multiple sources, for aggregation, storage, graphing, etc.... The source ID used for Netflow v 1-8 data is the IP address of the transmitter.

There are a lot of differences between argus data and netflow data: protocol support, encapsulation reporting, time precision, size of records, style and type of metrics covered. These differences are getting smaller with Netflow v9, but the biggest difference with regard to processing of Netflow data, is the directional data model.

Argus is a bi-directional flow monitor. Argus will track both sides of a network conversation when possible, and report the metrics for the complete conversation in the same flow record. The bi-directional monitor approach enables argus to provide Availability, Connectivity, Fault, Performance and Round Trip metrics. Netflow, on the other hand, is a uni-directional flow monitor, reporting only on the status and state of each half of each conversation, independently. This is a huge difference, not only in amount of data needed to report the stats (two records per transaction vs one) but also in the kind of information that the sensor can report on. There are benefits from an implemenation perspective (performance) to reporting only half-duplex flow statistics, but ..... argus sensors work great in asymmetric routing environments, where it only see's one half of the connection. In these situations, argus works just like Netflow.

Argus-client aggregating programs like racluster(), ratop(), rasqlinsert() and rabins(), have the ability to stitch uni-directional flow records into bi-directional flow records. In its default mode, racluster() will perform RACLUSTER_AUTO_CORRECTION, which takes a flow record, and generates both uni-directional keys for cache hits and merging. The results are "Two flows enter, one flow leaves" (to quote Mad Max Beyond Thunderdome).

When uni-directional flows are merged together, racluster() will create some of the metrics that argus would have generated, such as duration statistics, and some TCP state indications. And now filters like "con" (flows that were connected) and aggregations oriented around Availability (racluster -A) work.

When establishing an archive of argus data, most sites will process their files with racluster() early in the archive establishment, but it is optional. When the data is derived from Netflow Data, the use of racluster() is compelling and should be considered a MUST.

thoth:tmp carter$ ra -r /tmp/ra.netflow.out

StartTime Proto SrcAddr Sport Dir DstAddr Dport SrcPkt DstPkt SrcBytes DstBytes

12:34:31.658 udp 192.168.0.67.61251 -> 192.168.0.1.snmp 1 0 74 0

12:34:31.718 udp 192.168.0.67.61252 -> 192.168.0.1.snmp 1 0 74 0

12:35:31.848 udp 192.168.0.67.61253 -> 192.168.0.1.snmp 10 0 796 0

12:35:31.938 udp 192.168.0.67.61254 -> 192.168.0.1.snmp 1 0 74 0

12:35:31.941 udp 192.168.0.1.snmp -> 192.168.0.67.61254 1 0 78 0

12:35:31.851 udp 192.168.0.1.snmp -> 192.168.0.67.61253 10 0 861 0

thoth:tmp carter$ racluster -r /tmp/ra.netflow.out

StartTime Proto SrcAddr Sport Dir DstAddr Dport SrcPkt DstPkt SrcBytes DstBytes

12:34:31.658 udp 192.168.0.67.61251 -> 192.168.0.1.snmp 1 0 74 0

12:34:31.718 udp 192.168.0.67.61252 -> 192.168.0.1.snmp 1 0 74 0

12:35:31.848 udp 92.168.0.67.61253 -> 192.168.0.1.snmp 10 10 796 861

12:35:31.938 udp 192.168.0.67.61254 -> 92.168.0.1.snmp 1 1 74 78

Audit Systems

Argus fills a huge niche in many enterprise security systems by supporting audit for the network. Network audit was recognized as the key to trusted network enterprise security in the Red Book (NCSG-TG-005), published in the 1987. Network Audit is completely different from other types of security measures, such as Firewalling (mandatory access control) or IDS or IPS, where a system can generate a notification or alarm if something of interest happens. The goal of Network Audit, is to provide accountability for network use. While the Rainbow series is not in the contemporary security spotlight, the concepts that it developed are fundamental to trusted computing.

If done well, Network Audit can enable a large number of mechanisms, Situational Awareness, Security, Traffic Engineering, Accounting and Billing, to name a few. Audit is effective when you have good audit data generation, collection, distribution, processing and management. Argus and argus's client programs provide the basics for each of these areas, so lets get started.

Sensor Deployment

Effective network audit starts with sensor choice and deployment (cover). Sensor choice is crucial, as the sensor needs to reliably and accurately convey the correct identifiers, attributes, time and metrics needed for the audit application. Not every audit application needs every metric possible, so there are choices. We think argus is the best network flow monitor for security. Some sites have complete packet capture at specific observation points, while others have Netflow data a key points in the network. We're going to stay with argus as the primary sensor for this discussion.

Network Based

Network based argus deployment is dependant on how you insert argus into the data path. Argus has been integrated into DYI open-source based routers, (Quagga, OpenWRT) and that can provide you with a view into every interface. But, most sites use commercial routers.

The predominate way that sites insert argus into the network data path is through router/switch port mirroring. This involves configuring the device to mirroring both the input and output streams of a single interface to a monitor port. There are performance issues, but for most sites this is more than adequate to monitor the exterior border of the workgroup or enterprise network. Using this technique, argus can be deployed at many interfaces in the enterprise. But, when building a network audit system, there is a need for some formalisms in your deployment strategy, to ensure that the audit system sees all the traffic (ground truth). If you want to audit everything from the outside to the inside, you need a sensor on every interface that supports traffic that gets in and out. If you miss one, then you don't have a comprehensive audit, you have a partial audit and partial audits are not eventually sucessful for security.

Host Based

Argus has been successfully deployed to most end systems (computers, and some specialty devices, such as Ericsson's ViPr video conference terminal) and this type of deployment strategy is very powerful for auditing the LAN. Many computers support multiple interfaces, and so when deploying in end systems for audit purposes, be sure and monitor all the interfaces, if possible.

Data Collection

For most sites, the audit data will cover the enterprise/Internet border, but for some, it will involve generating data from hundreds of observation points. To collect the sensor data to a central point for storage, processing and archival, we use the program radium(). Radium() can attach to 100's of argus and netflow data sources, and distribute the resulting data stream to 100's of programs of interest. Radium() can simply collect and distribute "primitive" data (unmodified argus sensor data) or radium() can process the primitive data that it receives, correcting for time, filtering and labeling the data.

Using radium(), you can build a rather complex data flow framework, that collects argus data in near realtime. You can also use radium() to retreive data from sensors, or other repositories, on a scheduled basis, lets say hourly or daily. radium() provides the ability to deliver files from remote sites on demand. A simple example of how useful this is, is in the case where you deploy argus on a laptop and have the argus data stored on the laptops native file system. Assuming the laptop leaves campus or the corporate headquarters for a few days, when it returns, the radium() running on the laptop can serve up files from its local repository, when asked by an authorized collector.

For Incident Response organizations, where you receive packet files as a part of the investigation of an incident, you can use rasplit() to insert the argus data you generate from the packet files into an incident specific audit system. Giving each origination site its own ARGUS_MONITOR_ID,and using that unique ID when creating the argus records, you can generate a rich incident network activity audit facility to assist in incident identification, correlation, analysis, planning, and tracking.

Repository Generation

Audit information needs to be stored for processing and historical reference. Argus-clients support two fundamental strategies for storage: native file system support and MySQL. There are advantages and limitations to both mechanisms, and you can use both at the same time to get the best of both worlds.

Native File System Repository

If you are collecting a little data every now and then, or collection huge amounts of data (> 10GB/day) or collecting from a lot of sensors, a native file system respository is a good starting point. A native file system repository is one where all the collected "primitive" data is stored in files in a structured hierarchical system. This approach has a lot of advantages; ease of use, performance, familiar data management utilities for archiving, compressing and removal, etc... but it lacks a lot of the sophisticated data assurance features you find in modern relational database management systems.

The best program for establishing a native file system respository is rasplit() or rastream().

rasplit -M time 5m -w /argus/archive/primitive/\$srcid/%Y/%m/%d/argus.%Y.%m%d.%H.%M.%S -S argus.data.source

Run in this fashion, rasplit() will open and close files as needed to write data into a probe oriented, time structured file system, where the files hold data for each 5 minute time period. Five mintues is chosen because it generates 288 files in each daily directory, which is good for performance on most Unix filesystems, which have major performance problems when the number of files in a directory gets too big. If you don't like 5 minutes, and a lot of people do change this number, go to large chunks, not less.

MySQL Database Repository

The program rasqlinsert() allows you to insert "primitive" argus data directly into a MySQL database table(s). We use this very successfully when the number of flows are < 20M flows/day, which are most working group systems, and many, many enterprise networks.

rasqlinsert -m none -M time 1d -w mysql://user@localhost/argusData/argus_%Y_%m_%d -S argus.data.source

This appends argus data as it's received into MySQL tables that are named by Year_month_day that are in the argusData database. The table schema that is used has ascii columns and the actual binary record in each row that is inserted. The average size of an entry is 500 bytes, in this configuration, and so 20M flows/day will result in 10GB daily tables.

Now, if you want to read data from this repository, you need to specify a time bounds, which you can do by reading from a specific table, or you can provide a time range on the command line. Say you are interested in analyzing the flows seen in the first 20 mintues, from 2 days ago.

rasql -t -2d+20m -M time 1d -r mysql://user@localhost/argusData/argus_%Y_%m_%d

This takes the time range, and the table naming strategy, and figures out which table(s) from the local argusData database to read to provide you with data you're looking for. You can write these records to a file, or pipe to other programs, like racluster() to generate a view that you want. See ra.1 for how to specify time filters.

Primitive Data Processing

The argus audit repository establishes an information system that contains status reports for all the network activity observed. All the utility of the Network Audit is extracted from this information system. From a security perspective, there are 3 basic things that the audit supports:

| 1. | Network Forensics |

| 2. | Daily Custom Anomaly Detection Reports |

| 3. | Daily Operational Status Reports |

Network Forensics is a complex analytic process, and we discuss this topic here. Bascially, the process involves a lot of searching of the repository, to answer questions like "When was the first time we saw this IP address?", "Has any host accessed this host in the last 24 hours?", "Have we seen this pattern before?", "When was the first time this string was used in a URL?". Some queries are constrained to specific time regions, but others encompass the entire repository. The type of network audit respository impacts how efficiently this type of analytic process progresses, and so a well structured Network Forensics respository will use a combination of native file system and RDBMs support. But, for simple queries, such as "What happend around 11:35 last night?" any repository strategy will do very well.

Many sites develop their own custom security and operational status reports, which they generally run at night. These include reports on the numbers of scanners, how many internal hosts were accessed from the outside, reports of "odd" flows, the number of internal machines accessing machines on the outside, the top talkers in and out of the enterprise, to see if a machine starts leaking GBs of data, and any accesses from foreign countries, etc... This type of processing generally involves reading an entire days worth of flow records, aggreagating the data using various strategies, and then comparing the output witha set of filtering criteria, or an "expected" list.

Generally, if you are doing a small number of passes through an entire day's data, a native file system repository is the best architecture. However, you can create a temporary native file system repository from the MySQL database repository, if the time range isn't too large, so which repository you start with is really dependent on how comfortable you are with MySQL, at least that has been my experience.

Archive Management

Network Audit for many sites is a very large data issue, for some a problem. There are sites that collect a few Terabytes of flow data per day, and so archive management is a really important issue. But even if you only collect a few 100 KBs of data per day, archive management is important. A good rule of thumb for security is keep it all, until you can't.

The biggest issue in archive management is size, which is the product of the data ingest rates (how many flow per day are you generating and collecting) and the data retention time (how long are you going to hold onto the data). The more data the more resources needed to use it (storage and processing). While compression helps, it really can cause more problems that it solves. Limiting retention (throwing data away) will limit the usefulness of the archive, so it will be a balancing act.

Compressing Data

We recommend compressing all the files in the archive. All ra* programs can read either bz2 or gz files, and so saving this data space up front is very reasonable. But it does cost in processing to uncompress a file every time you want to use it. Many sites keep the last 7 days worth of data uncompressed, as these are more likely to be accessed by scripts and people during the week, but compress all the rest. Scripts that do this are very simple to build, if you use a Year/month/day style file system structure.

Data Retention

Data retention is a big deal for some sites, as the amount of data they are generating per day is very large. With both data repository types, deleting data from the repository is very simple. Delete the directory for an entire day, or drop the MySQL database table. But many sites want to have staged management of their repository data. Working retention policies have included strategies like: "Primitive" data for 6 months, Daily "Matrix" data forever online. "Primitive" data on CD/Tape for 2 years.

How long you keep your data around determines the utility of the audit system. From a security perspective, the primitive data has value for at least 1 year. Many sites are notified of security issues up to 12 months after the incident. In some situations, such as Digital Rights Management issues, where university students downloaded copyright protected material to their dorm room, and then redistributed the material through peer-to-peer networks, these have been reported 18 months after the fact.

The respository doesn't have to be "online" to be effective, and what is kept online, doesn't have to be the original "primitive" data. These issues in data retention are best determined through site policy on what the data is being retained for, and then the type of data retained can be tailored to the policy.

Some sites are concerned that if they have a repository, someone, such as law enforcement, may ask for it, and they are not prepared for the potential consequences. This is not an issue that has come up yet, for the sites that we are aware of, but if you do decide to have long retention times on your audit archive, consider developing policy on how you will want to share the information.

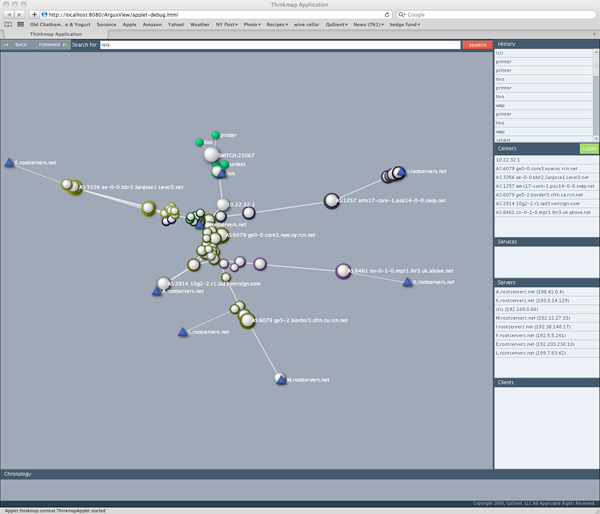

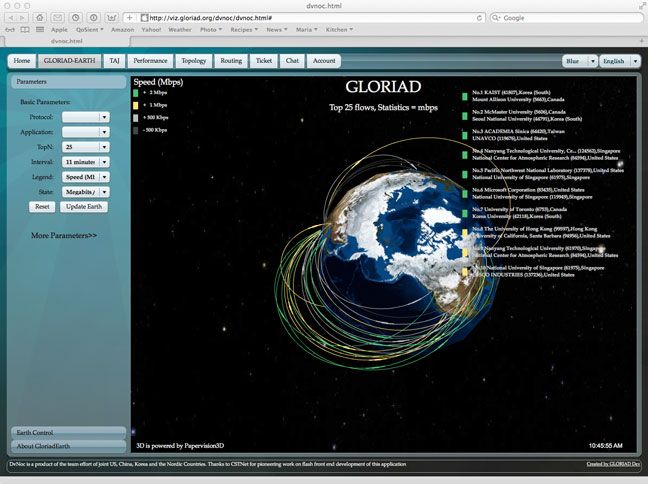

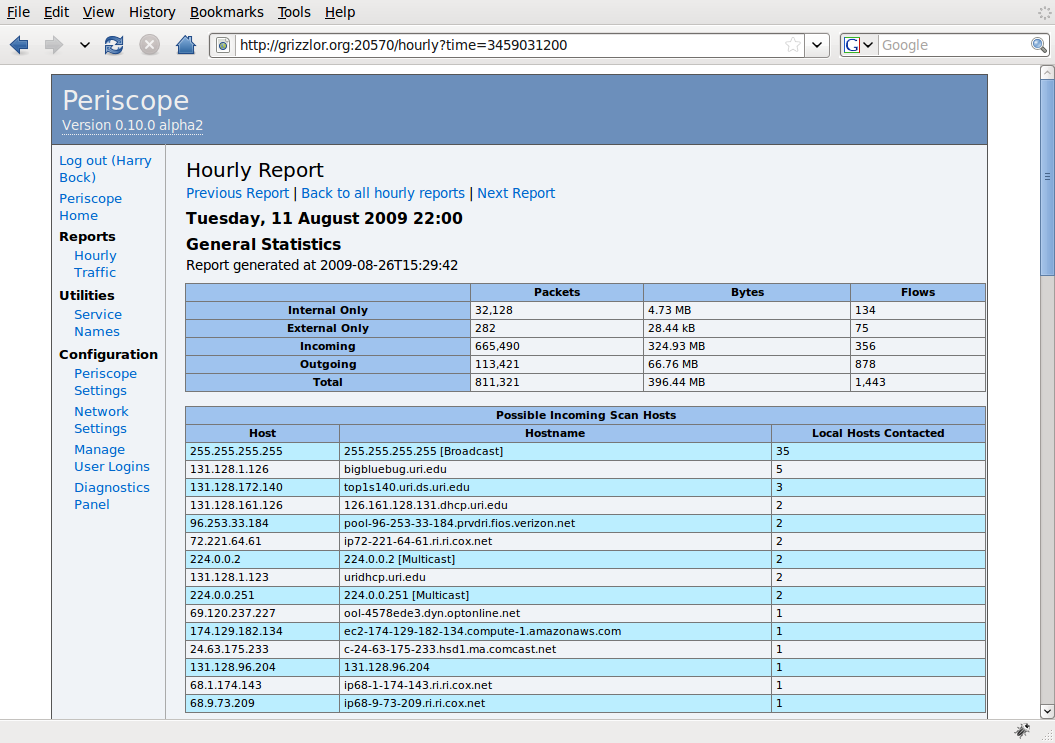

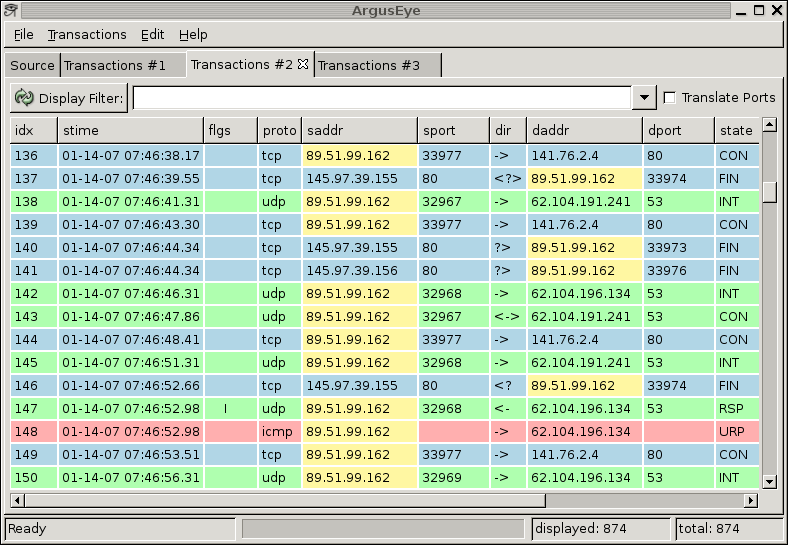

Visualization

There are a lot of topics to present here. These images are links to efforts that use argus data for visualization. Some are references to other web-sites and other projects, so you may wind up somewhere else in the Internet, but hopefully you will find it interesting. Not all of the references are to "active" projects, but as long as the links are working, all do refer to code that is available for implemenation, or are descriptive enough to provide a good example as to what/how people are visualization argus data.

This page is in no way "complete", and more work is coming in the next months. But, please send any suggestions for additional images, and HOW-TO's for graphing and do send any links that have visualizations that you created using argus data.

Each of the pages should be somewhat descriptive.

Database Support

Using network flow data for network operations, performance and security mangaement is a large data problem, in that we're talking about collecting, processing and storing a large amount of data. Modern relational databases management (RDBM) technology works very well to assist in processing and mining all that data. Some thought and engineering, however, needs to be done to get the most benefit.

When using RDBM technology to support flow data auditing and analysis, the primary issue is database performance and data arrival rates. Can the RDBM keep up, inserting the flow data from the sensors and also serve queries against the data? For most companies, corporations and some universities and colleges, using an RDBM like MySQL to manage and process all the primitive data from their principal argus sensors (or netflow data sources) works well. Many database systems running on contemporary PC technology (dual/quad core, 2+GHz Intel, 8-16GB memory, 1+TB disk) can handle around 500-1000 argus record insertions per second, which is around 50-85M flows per day or around 70GB flow data/day), and have plenty of cycles to handle many standard forensic queries to the data, like "show me all the activity from this network address in the last month".

For larger sites, such as member universities of Internet2, where the flow record demand can get into the 20-50K flows per second range, there are databases that can keep up. The STANFORD Isis Project handles this type of load very well, but you are working with commercial systems that need a bit of thought and attention (as a general rule). For ISP's and national level monitoring systems, where the flow record demand can be in the 100K records per second range, databases are not currently capable of handling the transaction rates of the "primitive" data. By distributing the data, you can handle the flow demand, or you can use native file system methods and use RDBM technology to handle the "derived" data, such as the list of IP addresses seen. Hybrid architectures are how large scale organizations make using flow data successful.

Database Support Concepts

The key programs that support the direct use of argus data and databases are radium(), which is used to collect the data and transport it to a central node, and the two MySQL programs, rasqlinsert() and rasql(). These example programs (yes, they are just examples of what you can do), are designed to deal with schema decisions, database and table generation, and provide some simple queries strategies that are relevant to flow data.

rasqlinsert() is the primary RDBM program. It inserts argus data into MySQL database tables, and it tries to deal with most of the issues that come up when inserting data into a database. Flexible table creation? Near real-time insertion? Bulk insertion optimization? Support flexible keying, indexing and schema specification? Aggregate/filter/label records before you insert? Control the record insertion rate to control the impact on the database engine? Multi-threaded to deal with large loads? All of this sounds like some of the improtant requirements. If you see some missing, please send them to the email list, and we'll add them.

Using a database for handling argus data provides some interesting solutions to some interesting problems. racluster() has been limited in how many unique flows it can process, because of RAM limitations. rasqlinsert() can solve this problem, as it can do the aggregation of racluster(), but use a MySQL table as the backing store, rather than memory. Programs like rasort() which read in all the argus data, use qsort() to sort the records, and then output the records as a stream, have scaling issues, in that you need to have enough memory to hold the binary records. Programs like rasqlinsert() can insert the data into a database, and the database can sort the table contents. So hopefully we'll provide a lot of flexibility with these simple techniques.

rasqlinsert()

Getting data into a database table involves a few basic steps. You need to decide what specific data you want to put into the database, how you want the data to be processed (keys, indexes, paritioning, etc...), and then you have to transform the data into a format that the database system likes. rasqlinsert() attempts to handle these tasks with a simple set of configuration and command line options.

The program rasqlinsert() allows you to insert any type of data that is derived from argus data directly into MySQL database table(s). Think of rasqlinsert() as a collection of the basic ra* programs, but with a database backend. It can act like ra(), where it takes in an argus stream or file, and appeands it to a database table. rasqlinsert() can act like racluster() where it generates aggregated representations of the argus data stream, and it inserts the results into a database table. The output stream that rasqlinsert() generates can be directed to database tables using the same methods as rasplit(), such that the database table names can be dynamically controlled, and rasqlinsert() can act like ratop() but with the screen being duplicated to a database backend. Also, rasqlinsert() has a daemon mode, where it can do all of this near real-time database management operations, without having a CURSES based output window.

In this section, we'll describe the simplest operation, appending a live argus data stream to a set of database tables that are explicitly partioned by date. We use this very successfully when the number of flows are < 20M flows/day, which are most working group systems, and many, many enterprise networks.

Argus data inserted into a database table has two types of representation in the database:

1) the original primitive argus record, which is stored as a "BINARY BLOB" called "record".

2) additional fields that are the database table "COLUMNS" or attributes.

The argus record itself can contain up to 148 (at last count) fields, metrics, attributes, objects: far too many fields to expose in a database table, at least if you're worried about performance. But when you insert a record into a database, MySQL can't efficiently deal with "BINARY BLOB" attributes in that its hard to sort, index, select, key the values in the BLOB. So, its generally a good idea to provide some attributes that are inserted along with the binary record so that the database can efficiently work with the data.

The attributes can be any field that ra() can print, and you specify them using the "-s fields" option. Because all ra* programs have a default list of printable fields, rasqlinsert() will use these default fields as attributes in the database, if you don't specify any other list, either in the .rarc file, or on the command line. rasqlinsert() supports two(2) addtional fields, an 'autoid' field, which MySQL understands, and the 'record' field, that provides you the ability to make the BINARY BLOB an optional field.

Because rasqlinsert() is like racluster() and ratop(), it also has a default flow key specified. This keying specification is passed into the database, and so if you don't want keys in your schema, or if you want to change the keying strategy, you'll need to a "-m keys" option on the commandline.

So lets run rasqlinsert() as a daemon, appending records coming from a remote near real-time argus data source to a MySQL database:

rasqlinsert -S argus.data.source -w mysql://user@localhost/argusData/argusTable -m none -d

This will attach to argus.data.source, and append any argus data into the MySQL table 'argusTable' that is in the 'argusData' database, using the 'root' account on the local MySQL database engine. The table schema that is created is composed of the default output fields that ra() uses and field for the actual binary argus record. Here is the table schema that was created:

mysql> desc argusData;

------------------------------------------------------------------------------------------------------

Field Type Null Key Default Extra

------------------------------------------------------------------------------------------------------

stime double(18,6) unsigned NO NULL

flgs varchar(32) YES NULL

proto varchar(16) NO NULL

saddr varchar(64) NO NULL

sport varchar(10) NO NULL

dir varchar(3) YES NULL

daddr varchar(64) NO NULL

pkts bigint(20) YES NULL

bytes igint(20) YES NULL

state varchar(32) YES NULL

record blob YES NULL

------------------------------------------------------------------------------------------------------

12 rows in set (0.00 sec)

This appends argus data as it's received into a single table. The average size of an entry is 500 bytes, in this configuration, and so 20M flows/day will result in 10GB daily tables.

With this type of table, you are storing your argus records in a MySQL database table. No real need for putting them anywhere else, so this could be considered your archive. You can keep the data in this single table, and use "PARTIONING" statements to structure the data based on time. However, if you were interested in an explicit partitioning scheme, you can have rasqlinsert() manage tables based on time, like rasplit() does.

rasqlinsert -S argus.data -m none -d \

-M time 1d -w mysql://user@localhost/argusData/argusTable_%Y_%m_%d

rasqlinsert() will use the "-M time 1d" and the "%Y_%m_%d" directive in the table name to generate MySQL database tables that have the daily date in the table name, and like rasplit(), it will 'split' the argus data records such that no record in the table extends beyound the bounds of the table time, in this case, 1 day.

These are a few simple examples of how rasqlinsert() can append argus data records to tables in a mysql database. Additonal, more complex tasks, will be described at this link (to be constructed).

rasql()

rasql() is a relatively simple program. It performs a SQL "SELECT" query for the "record" field in the specified database table. The "record" field is expected to be a "binary blob" that contains a single binary argus record. If there isn't a "record" field, rasql() quietly exists. After rasql() gets the argus record(s) from the database, it has the functionality of ra(), where it can print, filter, and/or output the records. Specifying the table for reading is done using the "-r url" format, where you indicate that you are looking for data from a "mysql:" database. The table can be explicit, or it can be programmatically derived.

By default, rasql() returns all the "records' from a table. There are a few strategies for "selecting" specific records from the table. There is a specific option for you to provide a "WHERE" clause to the SQL query, using the "-M sql='clause'" option. Using this option, you can use MySQL to select specific records from the table. When this opion is used, rasql() provides the "WHERE" and it takes your string and appends it. If there already is a "WHERE" clause being used, rasql() will append "AND " and then your phrase to the "WHERE" clause that will be used in the query. (so don't put in the "WHERE"). This is important if you are using a "-t start-stop" filter on the command line, as that will attempt to provide a "WHERE" clause, if the database table has a time field in the schema.

Now, for an example. Say you are interested in getting the flows seen from 00:00:00-00:12:00, but 2 days ago, from a specific IP address, 1.2.3.4. Lets assume that the data is stored in a set of tables that have the date in the table name, and the table schema contains both source and destination IP addresses, 'saddr' and 'daddr' respectively. This should work:

rasql -t -2d+20m -r mysql://user@localhost/argusData/argus_%Y_%m_%d \

-M sql="saddr='1.2.3.4' or daddr='1.2.3.4'"

This takes the time range, and the table naming strategy, and figures out which table(s) from the local argusData database to read from, to provide the "record" data you're looking for. The "-M sql="clase" option uses the 'saddr' and 'daddr' attributes which are ASCII strings. You can write these records to a file, or pipe to other programs, like racluster() to generate a view that you want. See ra.1 for how to specify time filters.

Filters

Argus and argus clients each support many types of filtering. Argus uses BPF packet filtering to control its input packets, when needed, and Argus and its client programs all support flow record fitlering either on input or on output. These two filtering systems are very important to building a successful network activity sensing infrastructure.

Argus packet filtering and flow record filtering share a lot of syntax and semantics, however, they are very different. Packet filtering refers to the ability to make a selection choice based on a packets contents, and is generally stateless; relaying solely on packet identifiers, semantics and abstractions. Flow filtering, on the other hand, is based on the semantics and attributes reported in flow records. Flows represent the behaviors and attributes shared by a set of packets, and in the case of Argus, bi-directional packets, so there are a number of abstractions and semantics that aren't relevant to packets, such as connectivity, availability, round trip times, loss, average rate and load.

A great example of how packet filter semantics differ from flow semantics is the concept of " Source " and " Destination ". The source and destination of data packet is pretty straightfoward, but what is the source of a bi-directional connection oriented flow? Flows share many packet attributes such as " bytes " or "TTL" or " Type of Service " markings. But int he case of" 'Bytes ", a packet's bytes represent the specified bytes within a single packet. A flow's bytes represent the total length, or the sum of the portions of the total number of packet observed for the flow. be " total bytes ", " last packet bytes ", but packets represent only a single packet, and flows generally report on multiple packets, so a flow filter may support a phrase like " src pkts gt 10 ".

All of this should help you to realize that Argus flow filters are completely different from packet filters.

Argus has used the libpcap packet filtering strategies as its guide for the last 19 years. Argus uses libpcap's filter engine from the libpcap library for its packet filter., which has supported easy porting of Argus to over 20 platforms, and counting. For flow filtering, argus and argus-clients have used a variation of the compiler that was originally written for libpcap, to provide flow filtering. This compiler has evolved over the years, but much of the structure of the argus flow record compiler and the lexical analyzer are inspired by the BSD Packet Filter, so thanks !!!!

Argus Filters

Input Packet Filtering

Argus uses a libpcap packet input filter to control its input stream. Argus based network activity monitoring is intended to monitor and account for everything on the wire, but input packet filtering is important for many types of network monitoring and surveillance. Issues such as sensor performance and monitor authority tend to bring input packet filtering into play.

For detailed explanations of the argus packet filter, we refer to the tcpdump filter documentation.

The Argus packet input filter is generally specifed in the /etc/argus.conf configuration file, or on the command line. The input filters are applied to the packet stream read from the specified physical or virtual interfaces. If provided on the command line or specified using the /etc/argus.conf 'ARGUS_FILTER' variable, the single filter is applied to all the packet input Argus will be reading from during the monitoring session. But, because Argus can support multiple simultaneous observation domains that relay on complex multi-interface packet sources, packet filters can be specified for each interface, in each role.

While these can become quite complex, and at times difficult to debug, the ability to support multiple filters at varying stages in the packet processing pipeline, has proven to be a powerful approach to digital network monitoring.

Output Flow Record Filtering

Argus can be configured to write out its flow record stream to a number different push and pull based transport strategies, and argus flow record filters are used to specify how that filtering will be done. These filters specified in detail in the ra.1 man page.

In addtion to argus configured filters, when a ra* client, such as radium, or rasplit, attach to an argus, it will send its " remote " filter to argus to specify what types of records should be sent. This is an optimization, to minimize the number of records sent to the client.

This defines the argus system two tiered filter stratgegy, where argus supplied filters are used to define the output stream, and client supplied filters are then applied as a second stage filter to control the offered load. This strategy is also used by radium.

Argus Client Filters

All argus clients support a 3-tiered argus flow record filter architecture.

% ra -S remote.argus - tcp and net 2.3.4.0/24

With this call, ra will compile the filter expression locally, and if it is correct, it will then send to the argus data stream source ' remote.argus ' the same filter expression. Before the remote.argus data source will begin transmitting data, it will compile the filter. Any compiler errors will be reported back to the client. This is important, as the remote.argus may not the most recent version and may not support the filter that is being presented.

% ra -S remote.argus - local tcp and net 2.3.4.0/24

In this case there will not be any remote filtering, and all the filtering will be done on the client side. For many installations, this is fine, but definitely not optimal.

Flow Tools

Argus clients now support a complete set of functions and operations on flow-tools data, when reading streams and files. By specifying that the input format is the flow-tools format, argus clients can read the netflow and juniper flow records, convert them to argus record formats, and then operate on that data, using the argus client tool set.

To turn on this support, you need to have a working flow-tools library, that the argus-cients ./configure can find. There are options in ./configure for specifying where to find the flow-tools library that you would like to use.

Argus clients attempt to provide all the data processing and analytic capabilities of the flow-tools packages, through its own client programs. However, if there is a function that you discover is missing, please notify us through the argus developers mailing list, and we'll add that support.

Enabling Flow Tools Support

Download Flow-tools Library

The latest flow tools distribution can be downloaded from this link at Google Code. You will need to unbundle the distribution, make it, and then install the libraries, or provide the path to the generated libraries in the ./configure of the argus-clients distribution.

Link Flow-tools to Argus-clients

If you are configuring and compiling your argus-clients from source code, the ./configure script will attempt to find a usable flow-tools library, in system and local standard installation target directories, as well as the parent directory that the argus-clients distribution resides. When it finds a suitable distribution, argus-clients will automatically enable the use of "ft:" as a file type specifier.

You can tell ./configure where the copy of flow-tools library is using the --with-libft=DIR option:

% ./configure --with-libft=/path/to/my/flow-tools-directory

Basic Flow Operations on Flow Tools Data

Once the argus-clients distribution has been linke to a suitable flow-tools library, reading flow tools data involves specifying the flow data type in the "-r" option. By writing the file out, the flow-tools data will be converted to argus flow data.

% ra -r ft:flow-tools-data.file -w argus.file - src host 2.4.1.5

Once converted to argus-data, the flows can be processed in any number of ways. To process the files without conversion, simply read the data using the appropriate ra* analytic program, using the "-r ft:" specifier. To generate a CSV file with your own basic fields (specified in your .rarc file):

% ra -r ft:flow-tools-data.file-c , - src host 2.4.1.5

Flow-Tools / Argus-Clients Capabilities

The argus-clients package includes a set of core client programs that map well to features in the flow-tools distribution. These features include printing, processing, sorting, aggregating, tallying, collecting, and distributing flow data. Here we provide basic examples of how to use these argus-client utilities; ra, rabins, racluster, racount, radium, ranonymize, rasort and rasplit, to provide flow-tools features.

| flow-capture |

rasplit |

rasplit provides most of the funcitons of flow-capture, with the exclusion of providing big-endian / little-endian conversion support, and archive file expiration. Additional programs provide this capability. |

|---|---|---|

|

flow-cat |

rasort |

all ra* programs can provide the flow concatentation feature of flow-cat, supporting the time filtering, but "rasort -m stime" provides flow-cat's "-g" option to sort the output by time. rasort does not provide integrated compression output. |

|

flow-dscan |

radark, raports, rahosts |

The flow-dscan analytic to detect suspicious activity, such as port scanning and host scanning is covered by a large number of argus analytics, such as radark, rahosts, raports. However, the argus-clients approach to suspicious activity is not the same as flow-dscan, so it may not be a good fit. |

|

flow-expire |

|

argus-clients provides archive management software, such as flow-expire, through it mysql support. Simple file crawling and deletion, archive, etc... have been discussed on the argus mailing list. |

|

flow-export |

ra, raconvert, rasqlinsert |

All argus-client programs can write flow data into a number of output formats, especially ASCII, CSV, XML. Database support is currently provided by a separate set of database programs. |

|

flow-fanout |

radium, ranonymize |

Radium is the argus-clients collection and distribution system that provides all the properties of flow-fanout, except flow data manipulation (-A AS0_substitution and -m privacy_mask). Other programs provide these functions. |

|

flow-filter |

ra, rapolicy |

All argus-client programs support the same filtering capabilities, which are a superset of the flow-filter filters. To provide the "-f acl_fname" functions, use rapolicy. |

|

flow-gen |

|

The argus web site provides a number of flow data files for test purposes. |

|

flow-import |

raconvert |

raconvert reads ASCII CSV files and converts them to argus data. |

|

flow-mask |

racluster, ranonymize |

racluster, the argus-clients aggregation utility is used to modify the flow key attributes to match some level of abstraction, without losing any of the data charateristics. If the purpose, however, is to anonymize data, use ranonymize. |

|

flow-merge |

rasort |

All argus-client programs can merge flow files together, however to control the output so that its interleaved, use rasort -m stime. |

|

flow-nfilter |

ra |

All argus-client programs can filter records based on a complex filters, which can be provided on the command line or in a rarc file. Argus-clients do not yet support the "-v variable binding" option, however. |

|

flow-print |

ra |

All argus-client programs can print the contents of the records it processes, using a free format strategy. |

|

flow-receive |

ra |

All argus-client programs can "receive" flow-tools data records. |

|

flow-report |

|

argus-clients does not provide a specific flow-report function, but the argus-clients distribution provides a number of bash, sh, and perl example programs that generate reports. |

|

flow-send |

ra, radium |

All argus-client programs can "send" argus flow data to collectors, however, radium is the ra* program of choice |

|

flow-split |

rasplit |

rasplit provides all the capabilities of flow-split, with the additional features of spliting data based on flow record content. |

|

flow-stat |

racluster, racount, raports, rahosts, ra..... |

argus-clients does not provide a single program to provide the large number of reports that flow-stat generates. racluster, however, will generate many, if not most, of the data that flow-stat generates, through its general aggregation mechanisms. The distribution does provide a number of programs, like racount, raports, rahosts, that do provide similar information. |

|

flow-tag |

ralabel |

ralabel provides for a free form metadata label per flow record that provides all the capabilities of flow-tag, including filtering and aggregation support for the generic labels. |

|

flow-xlate |

ranonymize, raconvert |

ranonymize is the principal argus data field manipulation utility, however, many flow-xlate functions can be provided using raconvert and sed. |

Routing

Analysing Routing Protocols

Argus has defined flow abstractions for control plane protocos, such as routing (ISIS) and MPLS based LSP establishment (RSVP). This support is designed to provide enhanced operational status and performance of the control plane of large networks. In this first round of control plane monitoring, argus tracks the ISIS protocol, reporting metrics for ISIS hello's, adjacency establishment and status, and argus tracks individual ISIS link state advertisements. This strategy allows for argus to support detailed analysis of ISIS operations and performance. The goal is to provide the information needed to drive a complete operations, performance and security function for infrastructure control.

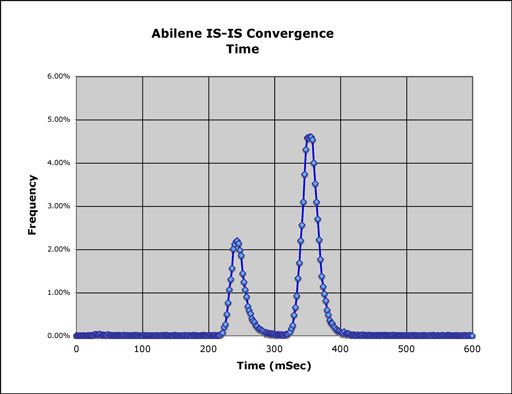

Argus and its client programs can be used to do simple analytics on routing performance, such as convergence analysis for a complete routing infrastructure. Using Internet2 ISIS packet traces, taken from key points in the Internet2 network, argus can generate metrics for the life of individual link state advertisements in the total network. Here is a simple graph for time to convergence for 66,211 link state advertisements, which are all the advertisements for one month last year, for 4 observation points in the Internet2 architecture.

This is done by running argus against the ISIS packet capture files, providing a unique srcid for each of the packet files (as they represent independent observation domains), and then running racluster() against the LSP flows that are in the file, removing the "srcid" from the flow key. This causes racluster() to merge all the matching Link state advertisement flows from all the observations domains into a single flow record. This record represents the life of the individual LSA in the ISIS network. Internet2 has done a great job with this data, because the timestamps on the packet captures are synchronized to within a few microseconds. Below we graph all the flow durations for the aggregated LSA flows.

Sensor Performance

Monitoring modern computer links is not a trivial task. With commerical IP services now performing at 40Gbps, and 10Gbps becoming common place, the computational demands of "keeping up" can be high. For Argus to perform well, it will need some good computational resources. This section will present what we know about hardware and configurations that should get you going pretty well.

Getting Packets - Network

Lets start at the link being monitored and talk about getting access to the data on the wire, preferably without impacting that data :-). The options are (more less from cheapest/worst to best most expensive):

Multiport Repeater

By definition only works on half duplex links, are hard to find these days (switches which are much more common won't work) and limited to 10/100. Works fine on an ADSL line and at low link speeds to tap in to the argus sensor to archive host data flow for trouble shooting (a copper tap will do this better but you need an fdx sniffer then).

Span Port on a Network Switch