ARGUS + ML

Argus and Machine Learning

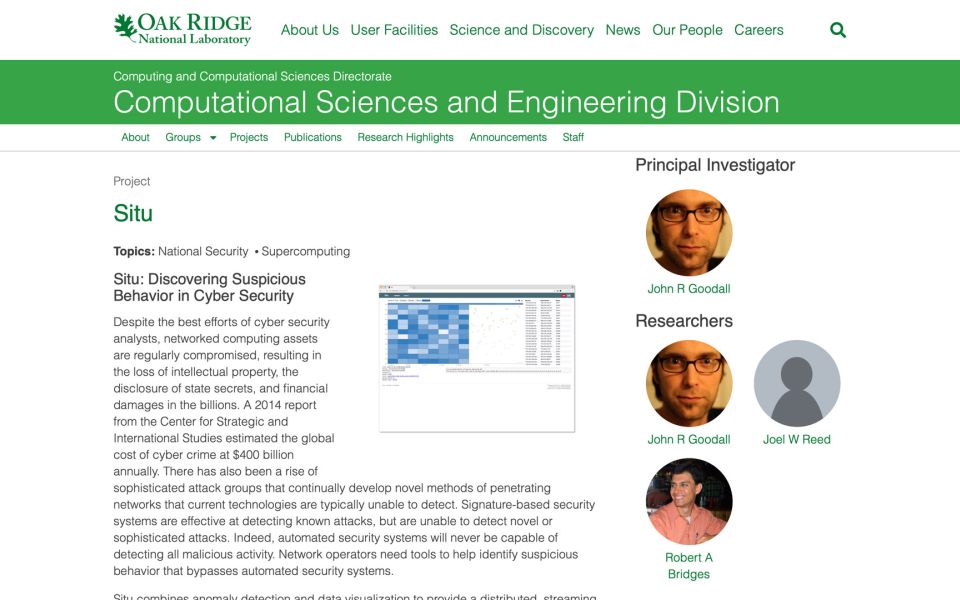

The Argus system is the network data source of choice for many prominent Machine Learning (ML) network intrusion detection and anomaly detection projects. Unsupervised learning on network flow data has been an active research topic for many years, and organizations such as Oak Ridge National Lab (ORNL) have had great success using Argus data in their operational system, SITU.

Argus's very large data capabilities, rich data models, flexible data formats, high-performance data generation and processing, metadata enhancement capabilities, streaming and block processing strategies, and technical maturity come together to provide an environment in which successful ML and AI models can be developed, tested, optimized and deployed.

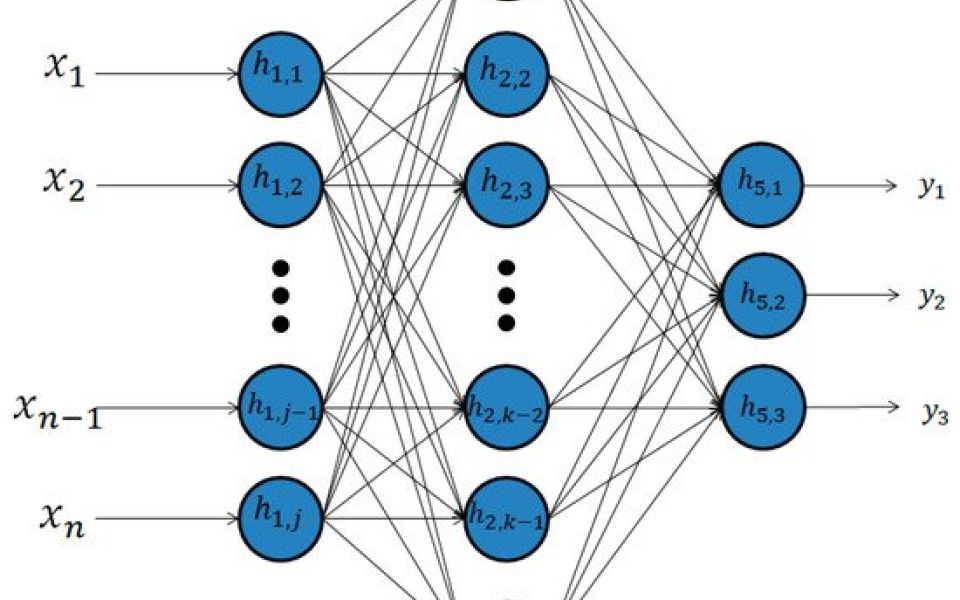

Massive amounts of non-statistical, historical, transactional network activity data are essential for ML model development and training, and a rich set of attributes associated with that data is a key component of deep learning. Argus is famous for being one of the first large-scale sources of well-structured network data, with the right kinds of attributes to let ML "peek" into network state and condition in real time: data that is actually useful and reliable.

Deploying ML in operational networks requires a stable and reliable platform for network data generation and processing. Argus provides the most mature streaming network situational awareness capability available, with guarantees on data timeliness, order and state, which makes it a natural choice for ML.

ML Concepts

ML and networking have been an interesting pair for a few decades now, and a number of basic concepts have emerged that help the Data Scientist approach the complexities of this topic: the more data, the better; non-statistical approaches yield the best prediction results; and designed data, rather than whatever data happens to be lying around, is key to successful ML solutions.

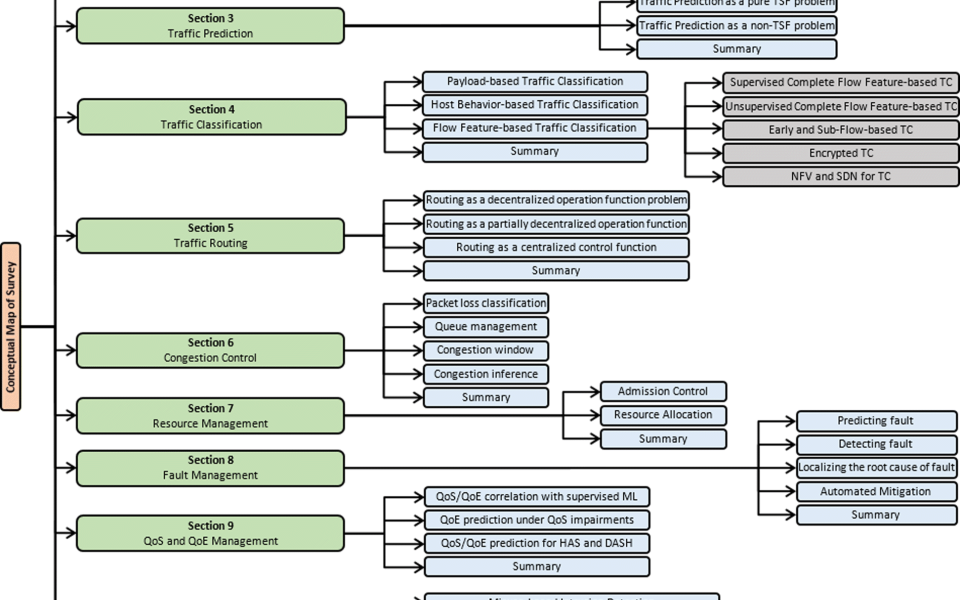

Data generation, collection, feature engineering, establishing ground truth, model development and validation, model optimization, and deployment are all complex concerns that must be addressed when considering an operational ML approach to any problem. Of the four broad categories of problems that can leverage ML, the Argus Project focuses on three: clustering, classification and regression of network traffic flow features. Because Argus has been used in many ML network research projects, it is a proven source of network traffic data that can deliver predictable and reliable results.
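To make the clustering category concrete, here is a minimal k-means (Lloyd's algorithm) sketch over flow-feature vectors, written in plain Python so it has no dependencies. The feature choice (log-scaled bytes, packets and duration per flow), the made-up sample flows, and the function names are illustrative assumptions. They are not part of Argus. For real work, a library implementation such as scikit-learn's would be the usual choice.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(point: Vector, centroids: List[Vector]) -> int:
    """Index of the centroid closest to point."""
    return min(range(len(centroids)), key=lambda c: squared_distance(point, centroids[c]))


def kmeans(points: List[Vector], k: int, iterations: int = 20, seed: int = 0):
    """Lloyd's algorithm: returns (centroids, labels) for k clusters."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen input points
    labels: List[int] = []
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        labels = [nearest(p, centroids) for p in points]
        # Update step: move each centroid to the mean of its members.
        updated = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                updated.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                updated.append(centroids[c])  # leave an empty cluster where it was
        if updated == centroids:
            break  # centroids stopped moving, so assignments cannot change
        centroids = updated
    return centroids, labels


def flow_features(nbytes: int, pkts: int, dur: float) -> Vector:
    """Log-scale heavy-tailed flow counters so no single one dominates distances."""
    return (math.log1p(nbytes), math.log1p(pkts), math.log1p(dur))


# Made-up flows: three small request/response exchanges and two bulk transfers.
flows = [(120, 2, 0.01), (150, 2, 0.02), (130, 3, 0.01),
         (5_000_000, 4000, 30.0), (4_500_000, 3800, 28.0)]
points = [flow_features(*f) for f in flows]
centroids, labels = kmeans(points, k=2)
print(labels)
```

The log scaling is the main design choice. Raw byte counts span many orders of magnitude and would otherwise swamp every other feature in the distance calculation.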

The literature contains many papers applying ML to network traffic prediction, classification, routing, operations, performance and network security. The data source is either packets (packet-level features) or network flow data (flow-level features).
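The difference between packet-level and flow-level features can be shown with a toy aggregation. Packets that share a 5-tuple (source and destination address and port, plus protocol) are folded into one flow record that carries packet count, byte count and duration. This is a minimal sketch with hypothetical field names, and it builds unidirectional flows only. It is not how Argus itself builds flow records, which are bidirectional and carry far more state and attributes.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple

FlowKey = Tuple[str, str, int, int, str]  # (saddr, daddr, sport, dport, proto)


@dataclass
class Packet:
    ts: float      # capture timestamp in seconds
    saddr: str
    daddr: str
    sport: int
    dport: int
    proto: str
    length: int    # bytes on the wire


@dataclass
class Flow:
    key: FlowKey
    first: float
    last: float
    pkts: int = 0
    nbytes: int = 0

    @property
    def dur(self) -> float:
        return self.last - self.first


def packets_to_flows(packets: Iterable[Packet]) -> List[Flow]:
    """Aggregate packet-level records into unidirectional flow-level records."""
    flows: Dict[FlowKey, Flow] = {}
    for p in packets:
        key = (p.saddr, p.daddr, p.sport, p.dport, p.proto)
        flow = flows.get(key)
        if flow is None:
            flow = flows[key] = Flow(key=key, first=p.ts, last=p.ts)
        flow.first = min(flow.first, p.ts)
        flow.last = max(flow.last, p.ts)
        flow.pkts += 1
        flow.nbytes += p.length
    return list(flows.values())


packets = [
    Packet(0.00, "10.0.0.1", "10.0.0.2", 40000, 443, "tcp", 60),
    Packet(0.05, "10.0.0.1", "10.0.0.2", 40000, 443, "tcp", 1500),
    Packet(0.20, "10.0.0.1", "10.0.0.2", 40000, 443, "tcp", 1500),
    Packet(0.10, "10.0.0.3", "10.0.0.2", 53000, 53, "udp", 80),
]
for f in packets_to_flows(packets):
    print(f.key, f.pkts, f.nbytes, round(f.dur, 2))
```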

Argus is used in the UNSW-NB15 dataset, which, because of its rich data features, is the most frequently used standard dataset for network-based Machine Learning studies.

Traffic classification dominates the network ML literature, and Argus data is designed specifically for flow-feature-based, as well as early and sub-flow-based, traffic analytics and classification. Argus data also contains sampled payload data, so it is well suited to most payload-based traffic classification, especially encrypted traffic classification. And because Argus can be deployed in end systems and embedded in networking devices, it is ideal data for host-behavior-based traffic classification as well as NFV / SDN-based classification. ML for network security builds on traffic classification and focuses on traffic anomaly detection and hybrid intrusion detection.
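A flow-feature-based classifier can be as simple as nearest-centroid classification. You average the feature vectors of the labelled training flows in each class, then assign each new flow to the class whose mean is closest. The sketch below uses two hypothetical features (mean packet size in bytes and packets per second) and made-up training values, purely for illustration. Real classifiers would normalise features first and draw on many more Argus attributes and stronger models.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Features = Tuple[float, ...]


def train_centroids(samples: List[Tuple[Features, str]]) -> Dict[str, Features]:
    """Compute the mean feature vector for each class label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] += 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}


def classify(features: Sequence[float], centroids: Dict[str, Features]) -> str:
    """Return the label whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


# Hypothetical training flows: (mean packet size, packets per second) -> class.
training = [
    ((1400.0, 800.0), "bulk"),
    ((1350.0, 900.0), "bulk"),
    ((90.0, 20.0), "interactive"),
    ((110.0, 15.0), "interactive"),
]
centroids = train_centroids(training)
print(classify((1300.0, 700.0), centroids), classify((120.0, 30.0), centroids))
```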

Argus ML Projects

Argus is at the core of a number of prominent unsupervised ML projects at US National Laboratories, universities and private companies. These projects offer a glimpse of how Argus data can be used in large-scale operations to provide detection and protection for important assets.

In partnership with Purdue University, we integrated Argus into the NOS satellite simulation environment, where it drives a Wasserstein Generative Adversarial Network (WGAN) model that acts as a prototype behavioral anomaly detection system. We're introducing the Python code that enables this system in Argus 4.0.

Argus ML Environments

The technology needed for effective Machine Learning in network-based anomaly detection involves developing and supporting a set of environments that serve Data Scientists across the whole ML life cycle. We're working on Argus data processing in Python, R, Matlab and Mathematica.

Getting data into the platform is just one step in the process, and many platforms use CSV and JSON, both of which Argus supports. Getting streaming data into the platform, however, can be complex for some applications, and doing so for a 100G network can be very challenging.
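Once flow records have been exported as CSV text with the argus clients, loading them in Python needs only the standard library. This sketch assumes the export has a header row with the column names shown (stime, saddr, daddr, proto, pkts, bytes, dur). Those names are assumptions, not a fixed Argus schema, so match them to the header your export actually produces. The sketch also re-serialises each record as newline-delimited JSON, a common format for streaming consumers.

```python
import csv
import io
import json
from typing import Dict, Iterator, List

# Hypothetical CSV export; the column names are assumptions, not a fixed Argus schema.
SAMPLE = """stime,saddr,daddr,proto,pkts,bytes,dur
1700000000.10,10.0.0.1,10.0.0.2,tcp,12,4800,0.52
1700000001.75,10.0.0.3,10.0.0.2,udp,2,180,0.01
"""

# Columns to convert from text to numbers; everything else stays a string.
NUMERIC = {"stime": float, "pkts": int, "bytes": int, "dur": float}


def read_flows(text: str) -> Iterator[Dict[str, object]]:
    """Parse CSV flow records, converting known numeric columns to numbers."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {name: NUMERIC.get(name, str)(value) for name, value in row.items()}


def to_json_lines(records: List[Dict[str, object]]) -> str:
    """Serialise records as newline-delimited JSON, one flow per line."""
    return "\n".join(json.dumps(r, sort_keys=True) for r in records)


flows = list(read_flows(SAMPLE))
print(to_json_lines(flows))
```

Because `read_flows` is a generator, the same function works over a file handle that is still being appended to, which is a first step toward the streaming case.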

If there are other basic environments that you need, please give us a holler.

Argus ML Datasets

We believe that the most important part of the ML-OPS life cycle involves processing historical data for feature extraction, behavioral classification development, and training.
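As an illustration of feature extraction over historical flow data, the sketch below rolls individual flow records up into per-source-host behavioral features. It computes flow count, number of distinct destinations, number of distinct destination ports, and total bytes. The minimal record layout and the feature set are assumptions chosen for the example. They are not an Argus-defined schema.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, Iterable, Set, Tuple

# (saddr, daddr, dport, bytes): a hypothetical, minimal flow record.
FlowRecord = Tuple[str, str, int, int]


@dataclass
class HostFeatures:
    flows: int = 0
    total_bytes: int = 0
    destinations: Set[str] = field(default_factory=set)
    ports: Set[int] = field(default_factory=set)

    def as_vector(self) -> Tuple[int, int, int, int]:
        """Numeric feature vector: (flows, distinct dsts, distinct ports, bytes)."""
        return (self.flows, len(self.destinations), len(self.ports), self.total_bytes)


def extract_host_features(records: Iterable[FlowRecord]) -> Dict[str, HostFeatures]:
    """Aggregate historical flow records into per-source-host features."""
    hosts: Dict[str, HostFeatures] = defaultdict(HostFeatures)
    for saddr, daddr, dport, nbytes in records:
        h = hosts[saddr]
        h.flows += 1
        h.total_bytes += nbytes
        h.destinations.add(daddr)
        h.ports.add(dport)
    return dict(hosts)


history = [
    ("10.0.0.5", "10.0.0.2", 443, 5200),
    ("10.0.0.5", "10.0.0.2", 443, 3100),
    ("10.0.0.9", "10.0.0.2", 22, 90),
    ("10.0.0.9", "10.0.0.3", 23, 60),
    ("10.0.0.9", "10.0.0.4", 80, 60),
]
for host, feats in sorted(extract_host_features(history).items()):
    print(host, feats.as_vector())
```

In this feature space, a host like 10.0.0.9 stands out. It touches many destinations and ports with tiny flows, a scan-like pattern that a downstream classifier can learn to separate from ordinary client behavior.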

There are a number of existing datasets available for network AI/ML research that are based on Argus data or use Argus to provide key features of the dataset.

UNSW-NB15 was developed by the Australian Centre for Cyber Security (ACCS) and has been used in thousands of research papers.

The BoT-IoT dataset was created by designing a realistic network environment in the Cyber Range Lab of UNSW Canberra. The network environment incorporated a combination of normal and botnet traffic.

WUSTL has developed several datasets, including IIOT and EHMS 2020, which are derived from specific network testbeds.

And of course, you can develop your own datasets using Argus data and the argus clients.
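Building your own dataset mostly comes down to attaching ground-truth labels to flow records and writing them out in a training-friendly format. This minimal sketch labels flows by matching source addresses against a set of known attacker hosts from a controlled experiment. The addresses, field names, and labelling rule are hypothetical. Real ground truth usually also needs time windows and more careful attribution.

```python
import csv
import io
from typing import Dict, Iterable, List, Set


def label_flows(flows: Iterable[Dict[str, str]], attackers: Set[str]) -> List[Dict[str, str]]:
    """Add a binary 'label' column: 1 if the source host is a known attacker, else 0."""
    labelled = []
    for flow in flows:
        row = dict(flow)  # copy so the caller's records are left untouched
        row["label"] = "1" if flow["saddr"] in attackers else "0"
        labelled.append(row)
    return labelled


def write_dataset(rows: List[Dict[str, str]]) -> str:
    """Render labelled rows as CSV text with a header line."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=list(rows[0].keys()), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


# Hypothetical flows from a testbed run in which 192.168.1.66 was the attacker.
flows = [
    {"saddr": "192.168.1.10", "daddr": "192.168.1.1", "dport": "80", "bytes": "640"},
    {"saddr": "192.168.1.66", "daddr": "192.168.1.1", "dport": "23", "bytes": "60"},
]
dataset = write_dataset(label_flows(flows, attackers={"192.168.1.66"}))
print(dataset)
```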

Argus ML Examples

There seem to be two basic strategies for effective ML-assisted network classification, depending on whether the ML is integrated into a live streaming analytic or applied to collected data. Of the two, processing collected historical data for feature extraction, classification and training is, we believe, the most important part of the ML-OPS life cycle.
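The two strategies can share the same statistics if those statistics are computed incrementally. The sketch below uses Welford's online algorithm to maintain a running mean and variance of a single flow feature, assumed here to be bytes per flow. Each arriving record is scored with a z-score before it is folded into the baseline. Run over a stored file instead of a live feed, the same code becomes a batch pass. The threshold, warm-up length and sample values are arbitrary, and this simple z-score detector is a stand-in for a real model.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Iterator, Tuple


@dataclass
class RunningStats:
    """Welford's online mean/variance: one pass, constant memory."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        # Sample standard deviation; reported as 0.0 until two values are seen.
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


def score_stream(values: Iterable[float], threshold: float = 3.0,
                 warmup: int = 5) -> Iterator[Tuple[float, float, bool]]:
    """Yield (value, z-score, is_anomaly) for each value as it arrives."""
    stats = RunningStats()
    for x in values:
        ready = stats.n >= warmup and stats.std > 0
        z = (x - stats.mean) / stats.std if ready else 0.0
        yield x, z, abs(z) > threshold
        stats.update(x)  # fold the value into the baseline after scoring it


bytes_per_flow = [500, 520, 480, 510, 495, 505, 490, 50_000, 515]
for value, z, flagged in score_stream(bytes_per_flow):
    if flagged:
        print(f"anomaly: {value} bytes (z={z:.1f})")
```

For simplicity, this version folds flagged values into the baseline. An operational detector would likely exclude them so that a single outlier does not inflate the variance and mask the records that follow.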
